Designing with AI

Leveraging agentic workflows and AI-assisted design to accelerate product development and enhance user experience at LOOK.

Overview

LOOK is an early-stage beauty marketplace that helps makeup lovers find, explore, and book artists in an inspiring and intuitive way. As an early designer on the team, I explored how AI could power both internal workflows and user-facing features.

My focus was on using agentic workflows and LLM-powered systems to move faster as a small team, building smarter tools that made the platform easier to use, more scalable, and creatively aligned with our vision.

Key areas of focus included:

Smart Image Tagging for Makeup Artists – using AI to automatically analyze and label uploaded images, improving search accuracy and discovery

Agentic Workflows for Rapid Product Building – collaborating directly with AI agents to generate functional code and iterate on pages and components quickly

AI-Powered Personalization – designing recommendation systems that analyze user behavior and surface tailored content with clear, explainable logic

My Role

Product Designer

Timeline

3 Months

Tools

Figma, Lovable, OpenAI, GitHub

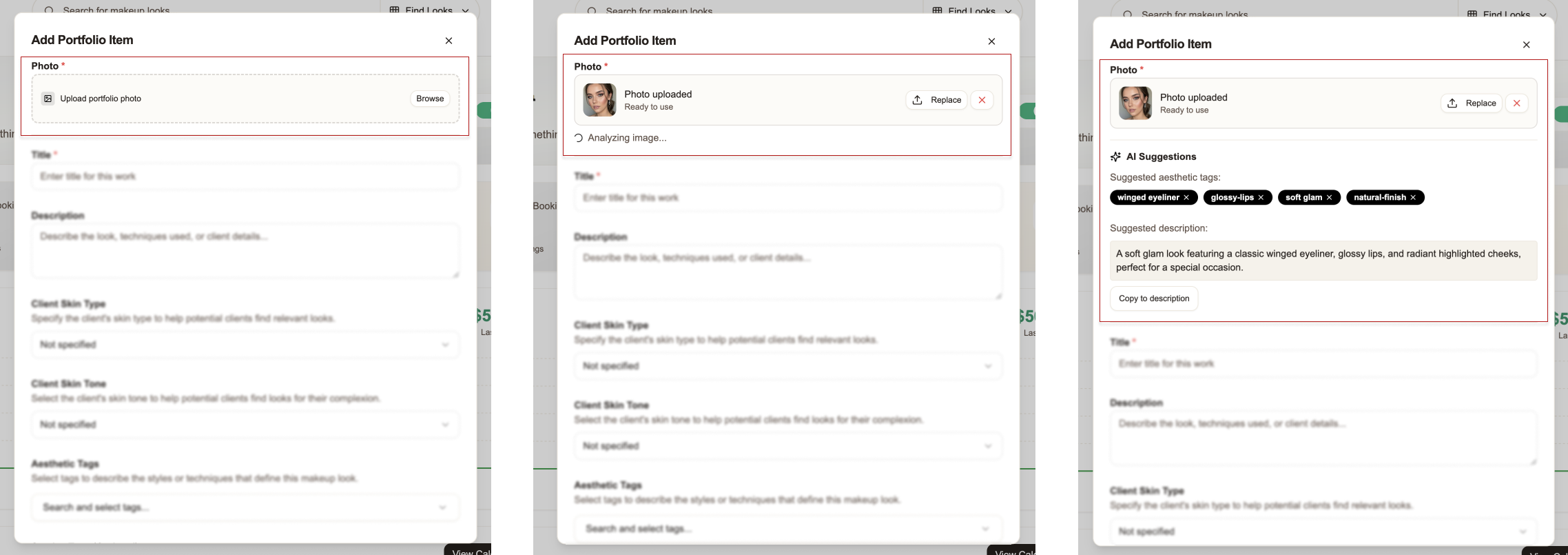

1. Smart Image Tagging for Makeup Artists

Problem

We needed a way for makeup artists to tag and organize their uploaded images without adding friction to the upload process. Manual tagging would have been time-consuming and inconsistent, limiting the accuracy of search results for users.

Solution

I designed a workflow where OpenAI’s LLM analyzed uploaded photos, detecting beauty-related attributes like skin finish, color palette, and style (e.g. “soft glam” or “editorial”).

The system generated 4–5 suggested tags for each image, so artists could either tag their own work or rely on the AI’s recommendations. Artists could review, edit, or add to this list, keeping creative control while saving time. In parallel, the AI generated 30–40 backend tags with detailed attributes (such as colors, seasons, occasions, and makeup techniques) to strengthen search relevance without adding complexity to the user experience.
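To make the two-tier tagging idea concrete, here is a minimal sketch of how artist-reviewed tags and backend tags might be combined for search. It is an illustration only, not the production implementation: the `ImageTags` structure and the `apply_artist_review` and `search_terms` helpers are hypothetical names, and the AI-generated tags are assumed to arrive as plain lists of strings.

```python
from dataclasses import dataclass, field


@dataclass
class ImageTags:
    """Hypothetical container for the tags attached to one uploaded image."""
    suggested: list[str]  # short AI-suggested list shown to the artist
    backend: list[str]    # longer AI-generated list used only for search
    artist_final: list[str] = field(default_factory=list)  # tags after review


def normalize(tag: str) -> str:
    """Lowercase a tag and collapse extra whitespace so duplicates match."""
    return " ".join(tag.lower().split())


def apply_artist_review(tags: ImageTags, kept: list[str], added: list[str]) -> ImageTags:
    """Record the artist's decision: which suggestions they kept, plus their own tags."""
    suggested = {normalize(t) for t in tags.suggested}
    final: list[str] = []
    for tag in kept:
        n = normalize(tag)
        # Only accept "kept" tags that were actually suggested.
        if n in suggested and n not in final:
            final.append(n)
    for tag in added:
        n = normalize(tag)
        # Artist-added tags are free-form; skip blanks and duplicates.
        if n and n not in final:
            final.append(n)
    return ImageTags(tags.suggested, tags.backend, final)


def search_terms(tags: ImageTags) -> list[str]:
    """Merge artist-approved tags (first) with backend tags, without duplicates."""
    seen: list[str] = []
    for tag in tags.artist_final + tags.backend:
        n = normalize(tag)
        if n not in seen:
            seen.append(n)
    return seen


tags = ImageTags(
    suggested=["Soft Glam", "Dewy Skin", "Editorial"],
    backend=["warm tones", "bridal", "soft glam"],
)
reviewed = apply_artist_review(tags, kept=["soft glam"], added=["Bold Lip"])
print(search_terms(reviewed))
```

Keeping the artist-approved tags separate from the backend tags means the artist only ever sees the short list, while search can still draw on the richer attribute set.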

Behind the scenes, an LLM trained on beauty-specific data made tagging more accurate while keeping the artist’s experience effortless.

My Role

Led UX design for the MUA upload and AI tag validation flow

Collaborated with engineers to train, test, and fine-tune the tagging model

Designed prompt structures and built feedback loops to improve LLM accuracy

Impact

Reduced manual tagging by over 80% in testing

Significantly improved the accuracy and speed of search results

Helped establish a scalable data structure for our beauty search engine

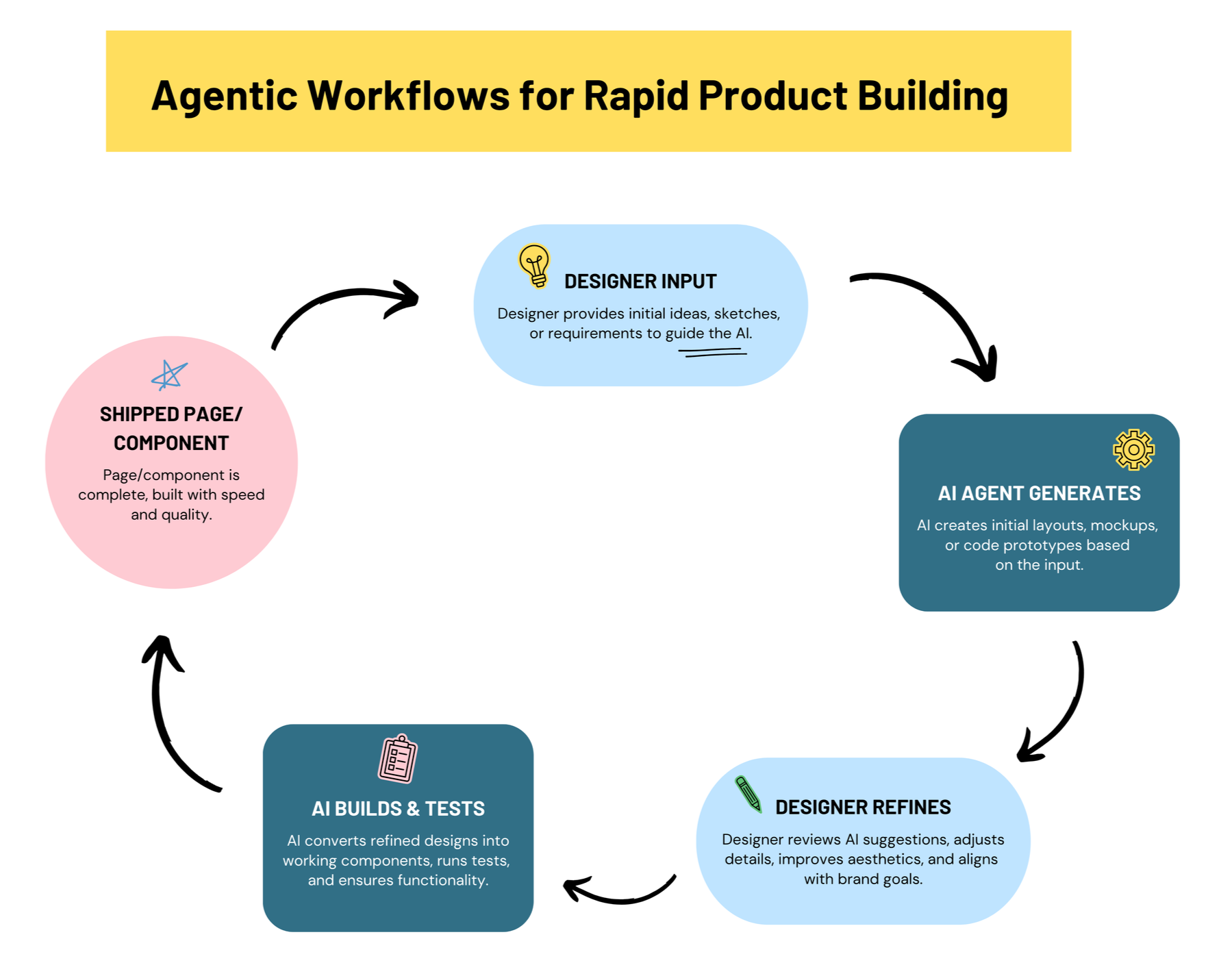

2. Agentic Workflows for Rapid Product Building

Problem

As a small startup team, we needed a faster way to design, test, and ship product updates — without sacrificing UX quality or depending entirely on engineering bandwidth.

Solution

Using Lovable, an AI-driven product builder powered by OpenAI, I collaborated with AI agents to generate functional code for pages and components.

This approach let me iterate rapidly between design and logic, maintaining control over UX/UI while accelerating development timelines.

My Role

Designed and led agentic workflows aligning UI, logic, and function

Collaborated directly with AI agents to generate, test, and refine live components

Integrated design assets into AI-built components to reduce iteration time

Partnered with engineers to validate and optimize AI-generated code

Impact

Reduced build timelines by about 5 months

Enabled our design team to contribute directly to coded prototypes

Established a repeatable workflow for AI-assisted feature development

Example of the AI agent’s response to a prompt

3. AI-Powered Personalization

Problem

Early feedback showed users felt overwhelmed by the volume of artists and looks. We needed a way to make discovery feel personal and inspiring, not just functional.

Solution

I designed an AI-powered recommendation system that analyzed user behavior (saved looks, favorited artists) to surface relevant creators and content.

Working closely with engineers, I co-led the design of how the OpenAI-powered model mapped aesthetic attributes to user preferences and how those results surfaced visually in the UI.

Each recommendation included transparent explanations (e.g., “Suggested because you saved similar soft-glam looks”) to build trust and make the AI logic clear.
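The pairing of a recommendation with a plain-language reason can be sketched in a few lines. This is a simplified stand-in, not the OpenAI-powered model described above: it swaps in a basic tag-overlap score, and the `style_profile` and `recommend` functions, the dictionary shapes, and the sample data are all assumptions made for illustration.

```python
from collections import Counter


def style_profile(saved_looks: list[dict]) -> Counter:
    """Count how often each style tag appears in the looks a user saved."""
    counts: Counter = Counter()
    for look in saved_looks:
        counts.update(look["tags"])
    return counts


def recommend(candidates: list[dict], saved_looks: list[dict], limit: int = 3) -> list[dict]:
    """Rank artists by tag overlap with the user's saved looks and attach a reason."""
    profile = style_profile(saved_looks)
    scored = []
    for artist in candidates:
        overlap = [t for t in artist["tags"] if profile[t] > 0]
        if not overlap:
            continue  # nothing to explain, so do not recommend
        score = sum(profile[t] for t in overlap)
        # Explain the match using the tag the user saved most often.
        top = max(overlap, key=lambda t: profile[t])
        reason = f"Suggested because you saved similar {top} looks"
        scored.append((score, artist["name"], reason))
    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda row: (-row[0], row[1]))
    return [{"artist": name, "reason": reason} for _, name, reason in scored[:limit]]


saved = [{"tags": ["soft-glam", "dewy"]}, {"tags": ["soft-glam"]}]
artists = [
    {"name": "Ana", "tags": ["editorial"]},
    {"name": "Bea", "tags": ["dewy", "soft-glam"]},
]
for rec in recommend(artists, saved):
    print(rec["artist"], "-", rec["reason"])
```

The design point is that the reason is produced from the same signal that drives the ranking, so the explanation shown to the user always matches why the item surfaced.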

My Role

Led UX design for AI-driven recommendation and personalization flows

Collaborated on data mapping and prompt design for style recognition

Created UI patterns for transparent, explainable AI recommendations

Prototyped and refined AI output quality using OpenAI tools during testing

Impact

Increased engagement with AI-driven content in early testing

Users responded positively to transparency in AI explanations

Built a scalable framework for personalization across future releases

(Due to IP restrictions, I cannot share final visuals.)

Outcome

Through these projects, I developed a strong foundation for integrating AI into design systems, not just as a tool, but as a creative collaborator. Across smart tagging, agentic workflows, and personalized recommendations, I worked hands-on with AI to solve both user-facing and internal product challenges.

While formal usability testing is still to come, early voice-of-customer (VoC) feedback has already informed feature flows, interaction patterns, and prioritization for the MVP. That groundwork sets up structured testing to validate improvements and guide further refinements.

These experiences reshaped how I approach design, blending logic, UX, and emerging technology to move faster, make smarter decisions, and build more scalable, intuitive products. The workflows and frameworks I created also set the stage for future AI-powered features and continuous iteration as the marketplace grows.